The rapid advancement of artificial intelligence presents a profound and complex challenge to Indigenous communities across Australia, raising critical questions about data sovereignty, cultural appropriation, and the very nature of consent. This warning comes from Emma Garlett, a prominent Indigenous lawyer and policy expert, who argues that without immediate and deliberate action, AI systems risk perpetuating historical injustices by exploiting Indigenous knowledge and data.

The Core of the Problem: Data, Consent, and Exploitation

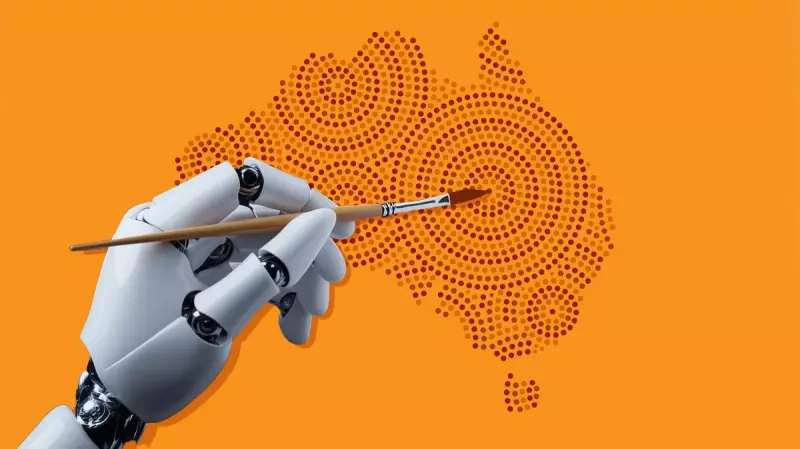

Emma Garlett, a Noongar woman and a respected voice in technology policy, highlights a central conflict. AI models are trained on vast datasets scraped from the internet, which often include Indigenous cultural expressions, languages, stories, and artistic works. This harvesting frequently occurs without the free, prior, and informed consent of the traditional owners. Garlett emphasises that this process mirrors colonial patterns of extraction, where resources are taken without permission and for the benefit of others.

The issue extends beyond simple copyright infringement. It strikes at the heart of Indigenous data sovereignty – the right of Indigenous peoples to govern the collection, ownership, and application of their data. When AI uses Indigenous cultural intellectual property to generate content or inform decisions, it can distort, commodify, and decontextualise sacred knowledge. Garlett points out that this not only disrespects cultural protocols but can also lead to the creation of inaccurate or harmful representations.

Real-World Risks and Cultural Harm

The potential for harm is not theoretical. Garlett outlines several tangible risks. AI-generated art could flood the market, devaluing the work of authentic Indigenous artists and confusing consumers about where a piece actually comes from. Language models trained on fragmented or incorrect sources could propagate misinformation about cultural practices. Furthermore, AI systems used in areas like resource management or land use planning could make recommendations that ignore deep cultural connections to Country, leading to poor outcomes for both community and environment.

"We're seeing our stories, our art, our languages being fed into these machines," Garlett states, highlighting the feeling of powerlessness this can create. The development cycle is so fast and opaque that communities are often unaware their cultural assets are being used until after the fact, leaving them with little recourse.

A Path Forward: Indigenous-Led Solutions and Ethical Frameworks

Despite these challenges, Garlett advocates proactive, Indigenous-led solutions. She insists that Indigenous communities must be at the decision-making table from the very beginning of AI development, not consulted as an afterthought. This means involving communities in the design of datasets, the development of algorithms, and the creation of governance models.

Key to this approach is the development of robust ethical frameworks that enforce true consent and ensure benefit-sharing. Garlett calls for technological and legal mechanisms that allow communities to control how their data is used and to receive fair compensation when it contributes to commercial AI products. She also stresses the importance of digital literacy programs that empower Indigenous people to understand, engage with, and even build their own AI tools tailored to community needs and values.

The conversation Garlett has started is urgent. As Australia and the world rush to embrace artificial intelligence, failing to address these issues risks building a future where technology amplifies existing inequalities and erodes the world's oldest living cultures. The challenge is profound, but with collaboration and respect, AI could also become a tool for preserving languages, sharing knowledge on Indigenous terms, and empowering communities.